The Issue

Assume we must copy a table that contains a LOB. There can be different reasons for this. For example, if the table is too fragmented or if there is no more space in the current tablespace.

The straightforward approach with Insert / * + append parallel (n) enable_parallel_dml * / simply to copy the data fails, however. It takes a long time at first. You can see that the time is spent inserting and not reading. How you copy the LOBS is crucial. The rest of the time is comparatively negligible.

The Insert .. Select approach has another problem: There are no partial results. Either you succeed or you have to start all over again.

Searching the Internet I found a blog by Marek Läll that deals with the subject of copying LOBs [1].

The core of the matter is that the LOB locator (LOB address) has not yet been determined at the time of the insert. A new LOB must be created, which means you also need a new locator. As a result, the LOB to be copied is first written to the TEMP tablespace. Then the new locator is determined and then the LOB is copied again from the TEMP tablespace to the actual target. Läll now suggests another way: First an empty LOB is written, its locator is then read via SELECT .. FOR UPDATE. The source LOB can be copied there in one go. This saves you from writing and reading the LOB, which is important. As already mentioned, the most important thing for efficiency is how the LOBs are treated.

I implemented the code in PL / SQL and optimized it a bit, rewritten it to BULK and avoided the SELECT .. FOR UPDATE via the returning clause.

The code is very efficient compared to other approaches I tested. In my real application, I can easily get to 1.5 million rows / hour with a parallel 24

Copy Part

To illustrate my code let us assume this simple table:

| CREATE table doc_table( doc_id Number, |

| document BLOB); |

The table has a BLOB column called document.

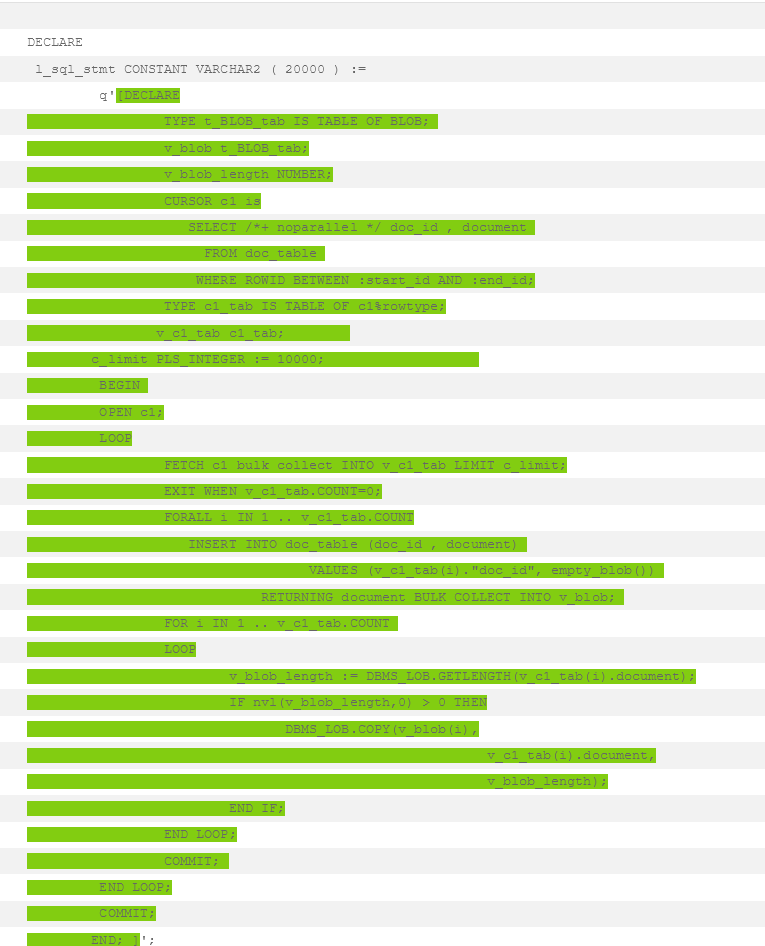

DECLARE |

TYPE t_BLOB_tab IS TABLE OF BLOB; |

v_blob t_BLOB_tab; |

v_blob_length NUMBER; |

CURSOR c1 is |

SELECT /*+ noparallel */ doc_id , document -- 1: noparallel hint |

FROM doc_table |

WHERE ROWID BETWEEN :start_id AND :end_id; -- 2: Start and End Rowid |

TYPE c1_tab IS TABLE OF c1%rowtype; |

v_c1_tab c1_tab; |

c_limit PLS_INTEGER := 10000; |

BEGIN |

OPEN c1; |

LOOP |

FETCH c1 bulk collect INTO v_c1_tab LIMIT c_limit; |

EXIT WHEN v_c1_tab.COUNT=0; |

FORALL i IN 1 .. v_c1_tab.COUNT |

INSERT INTO doc_table_new (doc_id , document P) -- 3: Conventional Insert |

VALUES (v_c1_tab(i).doc_id, empty_blob()) |

RETURNING document BULK COLLECT INTO v_blob; -- 4: Loblocator of new LOB |

FOR i IN 1 .. v_c1_tab.COUNT |

LOOP |

v_blob_length := DBMS_LOB.GETLENGTH(v_c1_tab(i).document); |

IF nvl(v_blob_length,0) > 0 THEN -- 5: DBMS_LOB.COPY will throw an exception |

DBMS_LOB.COPY(v_blob(i), -- for empty LOBS |

v_c1_tab(i).document, |

v_blob_length); |

END IF; |

END LOOP; |

COMMIT; |

END LOOP; |

COMMIT; |

END; |

/ |

Annotations:

1. The anonymous block is parallelized in the next step via DBMS_PARALLEL_EXECUTE. It would be illogical to parallelize again within a parallel process.

2. The start and end id must be used in the central select. They must not be declared, they are set from the DBMS_PARALLEL_EXECUTE framework.

3. A direct path write would be possible here using the APPEND_VALUES hint. I refrained from it so as not to provoke an exclusive table lock. I have not tested whether this actually would be the case. I am so satisfied with the performance of the solution described here that I consider a test to be unnecessary.

4. The return saves a SELECT .. FOR UPDATE.

5. DBMS_LOB.COPY is the fastest way to copy and seems to use a direct path.

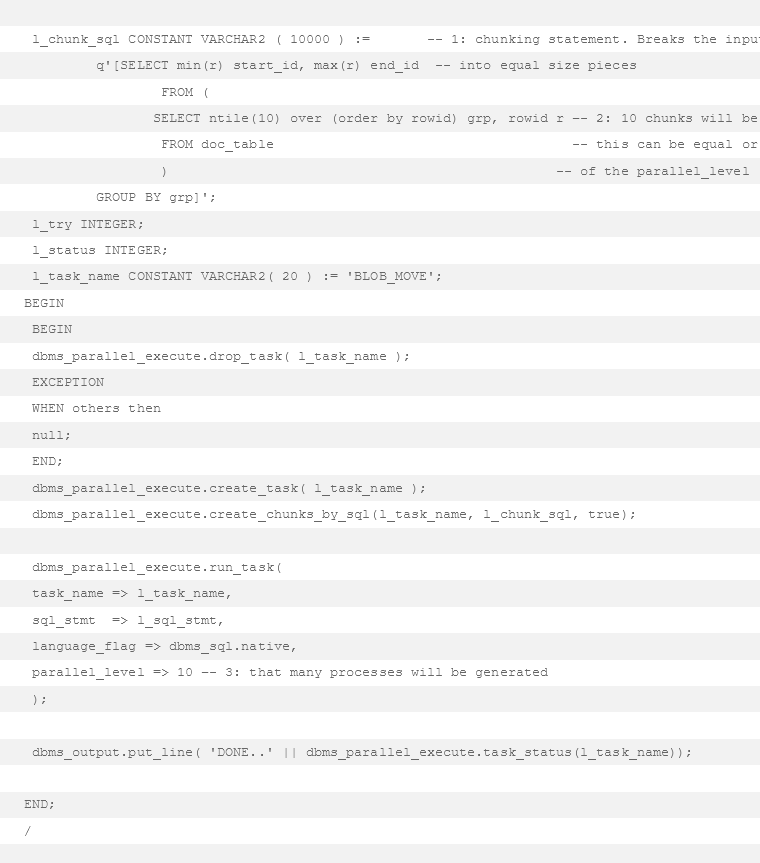

Parallelize

I could have called a stored function via parallel SQL in order to parallelize the approach. The decision to use DBMS_PARALLEL_EXECUTE was rather by instinct. There are some objective points for PL/SQL parallelization, however. E.g. a stored function would have resulted in many context switches. DBMS_PARALLEL_EXECUTE allows you to stay in PL / SQL. In addition, the code below will also work with Standard Edition.

I also did some tests with parallel SQL and functions, but never got them to scale. I would not exclude that there is a better approach than the one I present here. However, compared to the alternatives I have seen I rather like the approach presented below.

Here is the code for parallelization, I highlighted the anonymous block that we discussed in the previous paragraph.

Annotations:

There are several ways to divide the work. The chunking query is the most flexible. The search conditions here must also be found again in cursor c1.

Ntile (10) means that the result of the query will hopefully be divided into 10 equal sections.

References:

Marek Läll, binary/textual data insert into BLOB/CLOB columns – use 50% less database (IO) resources, Marek Läll / Technical blog about Oracle database: binary/textual data insert into BLOB/CLOB columns – use 50% less database (IO) resources (mareklall.blogspot.com)

Mag. Lothar Flatz, Senior Principal Consultant @DBConcepts.

Unser Oracle Mastermind kam 1989 erstmals mit Oracle in Berührung. Mittlerweile verfügt er über enormes Wissen und Erfahrung (15 Jahre als Oracle Mitarbeiter). Er war Mitglied in der Real World Performance Group und ist Mitglied im exklusiven Oaktable* und Oracle ACE*. Als einer der wenigen verfügt er über ein Patent zur Verbesserung des Optimizers. Er arbeitete an der Software für den LHC bei CERN in Genf mit. Sein Wissen und seine Erfahrung teilt er gerne als Autor für diverse Informatik Fachmagazine und als Sprecher bei einschlägigen Veranstaltungen (IT-Tagen, DOUG, AOUG, RMOUG, PGDay, POUG, OUGN uvm.). Aber auch als Dozent auf der TU Wien oder hier im Blog.

*Oaktable Member: 100 Mitglieder weltweit. Ausschließlich auf Einladung.

*Oracle ACE: Weltweit 500. Von Oracle für besondere Leistungen ernannt.